The DroneVisionControl application

Have you ever wanted a drone that could follow you around or respond to your hand gestures? That’s exactly what I set out to do with this project! Using the PX4 autopilot platform and some popular computer vision tools, I created a system that lets a drone track a person or respond to gestures in real time.

The project combined software development, hardware tinkering, and simulation to explore how vision-based control can make drones smarter and more interactive.

This application was developed as the final-year project for my bachelor's degree in Aerospace Engineering. The project report is hosted in the university's archive.

Key Features

- Follow Mode: The drone uses a camera to track a person and follow their movements automatically.

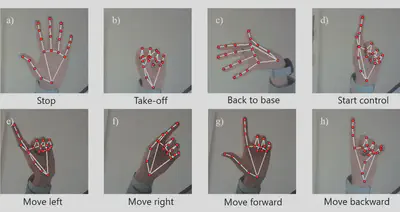

- Gesture Control Mode: Simple hand gestures control the drone's movement, such as commanding it to move forward or turn.

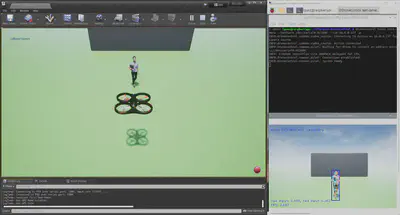

- Simulation and Flight Tests: A test platform built on AirSim and Unreal Engine lets the control algorithms be validated in a virtual environment before flying them on a real drone.

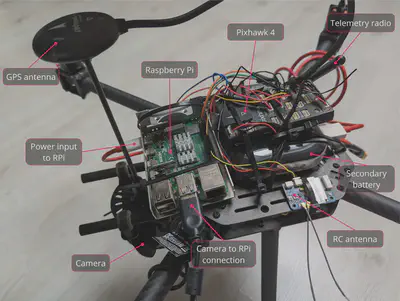

- Low-Cost Hardware: Built using affordable components like a Raspberry Pi and a basic webcam.

Tech Stack

- Languages: Python

- Tools: PX4 (autopilot), Google’s MediaPipe (for gesture detection), OpenCV (for image processing)

- Simulation: AirSim + Unreal Engine

- Hardware: Pixhawk 4, Raspberry Pi 4, Logitech C920

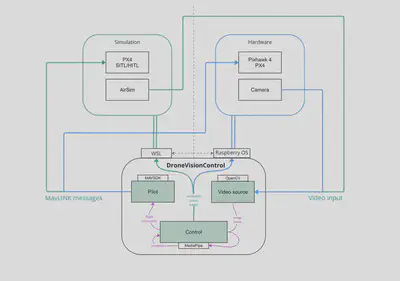

Software architecture

Showcase

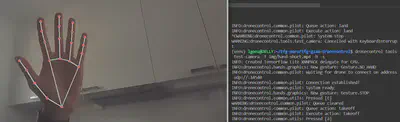

Hand gesture control

Available gestures

Interface

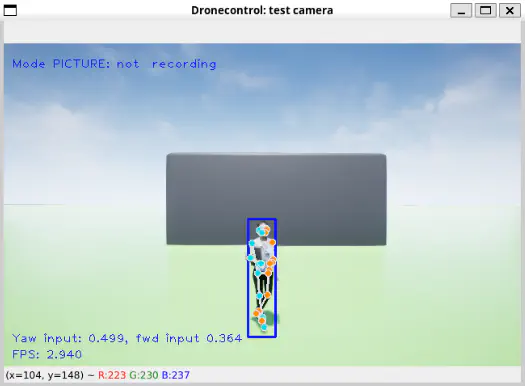

Person follow control

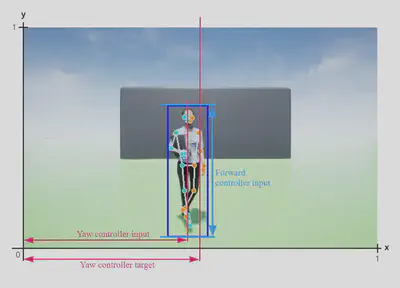

Inputs for drone control

Interface

Development Journey

Phase 1: Prototyping

I started with a simple idea: controlling the drone with hand gestures. Using MediaPipe for gesture detection and the PX4 platform for flight control, I created a prototype and tested it in a simulator called AirSim, built on top of Unreal Engine.
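The write-up does not list the exact gestures or how they were classified, so the following is only a hypothetical sketch of one common approach. It takes the 21 hand landmarks that MediaPipe Hands produces (normalized x, y coordinates, with y growing downward), counts how many of the four non-thumb fingers are extended by comparing each fingertip with its PIP joint, and maps that count to a command. The landmark indices follow MediaPipe's hand model; the `COMMANDS` table, the function names, the upright-hand assumption and the choice to ignore the thumb are all illustrative assumptions, not the project's actual design.

```python
from typing import Sequence, Tuple

# (x, y) in normalized image coordinates; y grows downward as in MediaPipe output
Landmark = Tuple[float, float]

# MediaPipe Hands landmark indices as (fingertip, PIP joint) for index..pinky.
# The thumb is ignored here because its extension depends on handedness.
FINGER_JOINTS = {
    "index": (8, 6),
    "middle": (12, 10),
    "ring": (16, 14),
    "pinky": (20, 18),
}

# Hypothetical gesture table: number of extended fingers -> drone command.
COMMANDS = {
    0: "hover",       # closed fist
    1: "forward",
    2: "backward",
    3: "turn_left",
    4: "turn_right",  # open hand
}


def extended_fingers(landmarks: Sequence[Landmark]) -> int:
    """Count extended fingers, assuming the hand is held upright in the frame."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    count = 0
    for tip, pip in FINGER_JOINTS.values():
        # A fingertip above its PIP joint (smaller y) counts as extended.
        if landmarks[tip][1] < landmarks[pip][1]:
            count += 1
    return count


def gesture_to_command(landmarks: Sequence[Landmark]) -> str:
    """Translate one frame's landmarks into a (hypothetical) command string."""
    return COMMANDS[extended_fingers(landmarks)]


if __name__ == "__main__":
    # Synthetic hand: index and middle fingers raised, ring and pinky curled.
    hand = [(0.5, 0.5)] * 21
    hand[8], hand[6] = (0.45, 0.2), (0.45, 0.4)
    hand[12], hand[10] = (0.5, 0.2), (0.5, 0.4)
    print(gesture_to_command(hand))
```

Counting fingers is cheap and needs no training data, which suits a Raspberry Pi, but it is sensitive to hand orientation; a real system would likely also require the same gesture over several consecutive frames before acting on it.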

Phase 2: Human Tracking

Next, I upgraded the system so the drone could follow a person. The vision pipeline locates the person in each camera frame, and the drone adjusts its flight to keep them centered in its view.
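Keeping a target centered is typically done with a feedback loop on the pixel error between the target and the image center. The snippet below is a minimal proportional-controller sketch under stated assumptions, not the project's actual controller: it assumes the detector reports the person's center `(cx, cy)` in pixels, turns horizontal error into a yaw rate and vertical error into a climb/descend speed, and applies a deadband and saturation limits. The gains, limits, function names and the use of altitude for vertical centering are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FollowCommand:
    yaw_rate_deg_s: float  # positive = turn right (clockwise seen from above)
    down_m_s: float        # positive = descend (NED-style body frame)


def clamp(value: float, limit: float) -> float:
    """Saturate value to the range [-limit, limit]."""
    return max(-limit, min(limit, value))


def follow_step(cx: float, cy: float, width: int, height: int,
                k_yaw: float = 60.0, k_z: float = 1.0,
                max_yaw: float = 45.0, max_z: float = 0.5,
                deadband: float = 0.05) -> FollowCommand:
    """One proportional control step toward centering the target (hypothetical gains)."""
    # Normalized errors in [-1, 1]; image y grows downward.
    ex = (cx - width / 2) / (width / 2)
    ey = (cy - height / 2) / (height / 2)
    # Ignore small errors so the drone does not jitter around the center.
    if abs(ex) < deadband:
        ex = 0.0
    if abs(ey) < deadband:
        ey = 0.0
    # Target right of center -> yaw right; target low in the frame -> descend.
    return FollowCommand(
        yaw_rate_deg_s=clamp(k_yaw * ex, max_yaw),
        down_m_s=clamp(k_z * ey, max_z),
    )


if __name__ == "__main__":
    # Person detected near the right edge of a 640x480 frame.
    print(follow_step(600, 240, 640, 480))
```

In a PX4 setup, values like these could be sent as an offboard velocity setpoint (for example MAVSDK's `VelocityBodyYawspeed`), although the write-up does not say which interface the project used. A pure P-controller is the simplest choice; adding derivative or integral terms helps when the target moves quickly or the drone drifts.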

Phase 3: Real-World Testing

Once everything worked in the simulator, I built a custom quadcopter and tested it outdoors. After extensive tuning, the drone successfully followed people and responded to gestures in real flight!

See It in Action

Want to explore more?

The hardware

What’s Next?

I’d love to expand on this project with:

- Better Tracking: Improving detection in challenging conditions like low light.

- Obstacle Avoidance: So the drone can safely navigate around objects.

- Multi-Drone Support: Imagine a team of drones working together!

This project was such a fun way to explore what’s possible with drones and computer vision. It’s a mix of tech, creativity, and problem-solving.